The use of AI at Brandeis: trends, ethics and the future of teaching and learning

As AI becomes an unavoidable consideration in academia, Brandeis students, faculty and staff members learn to navigate its challenges and opportunities.

Has the use of Artificial Intelligence in academia become inevitable? How is Brandeis University dealing with ethical and trust concerns among students and faculty members regarding the use of AI? A small-scale survey and interviews with various members of the Brandeis community provide insights into these questions.

A 2025 Global Student Survey found that four in five students worldwide use generative AI tools for their higher studies, with ChatGPT being the most popular. Generative AI tools are defined as “tools that generate words, images, or sounds in response to prompts with human-like efficiency.” A survey released by the Johns Hopkins University Press shows that 54% of college students use AI tools for writing, 53% for academic assistance and 10% for cheating.

Academic institutions cannot prevent AI use, but they can find ways to integrate it in a way that promotes responsible and ethical use. Colleges and universities in the United States have had different approaches to monitoring AI use in academic settings. At Harvard University, all faculty members are required to have a clear policy regarding the use of generative AI in class. Some example policies include “a maximally restrictive draft policy,” meaning that the use of generative AI tools is forbidden, or the “fully-encouraging draft policy,” which encourages students to explore AI tools in assignments and assessments, as long as their use is communicated.

Northeastern University also acknowledges the use of AI by providing clear policies that students and faculty members should follow. The use of AI tools in research is permitted, but researchers must undergo a review process when dealing with confidential information to address ethical concerns, such as the handling of personal data.

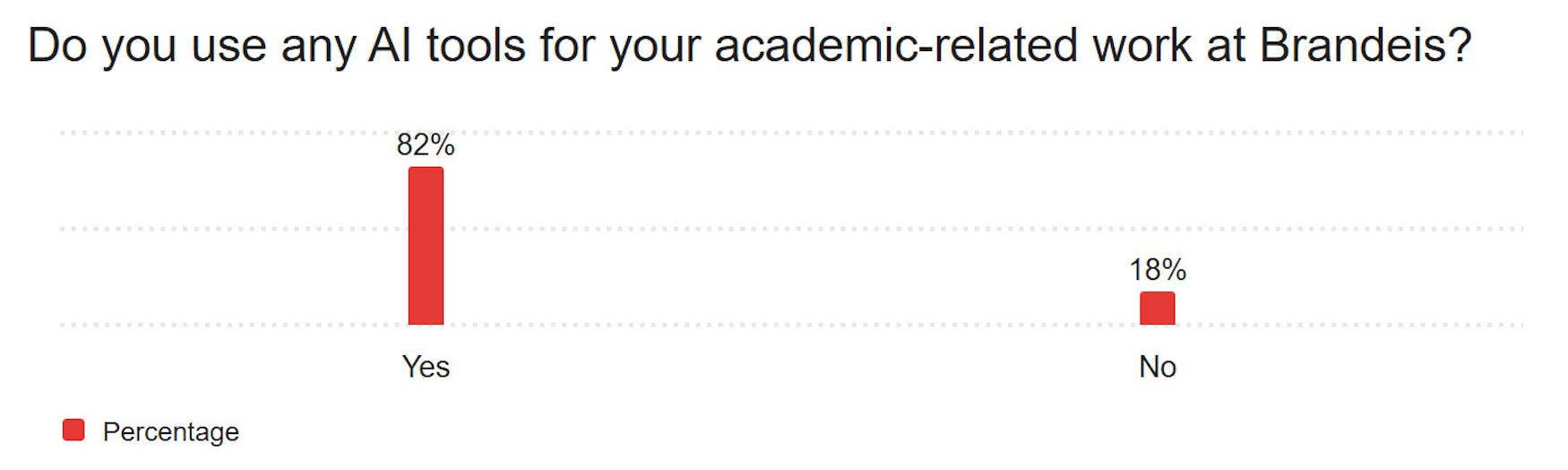

To inform this article, a small-scale survey conducted in January 2026 provides insights into how Brandeis students perceive artificial intelligence. As of press time, the survey received 28 responses from Brandeis undergraduate and graduate students, giving a snapshot of how they use AI and interpret the school’s AI policies. The majority of the respondents were undergraduate students from the classes of 2026–2028, while 14% were graduate students. 82% of the respondents reported using AI for their academic-related work at Brandeis, suggesting widespread use of AI on campus. The most frequently used tool was ChatGPT, used by 96% of these students, followed by Grammarly at 57%.

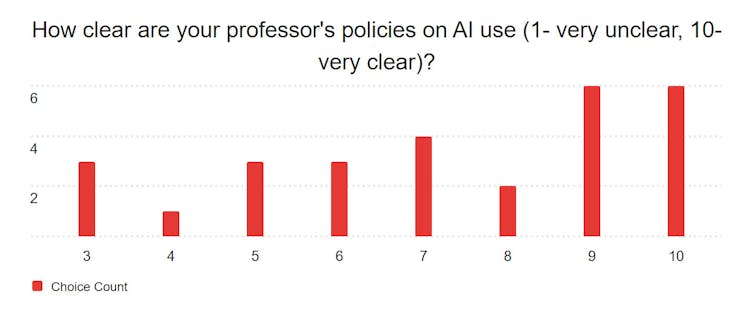

When it came to specific uses of AI tools, students had various responses. Responses indicated moderate to high use of AI tools for research, debugging or generating code and brainstorming for assignments. When asked whether the benefits of AI outweigh the harms, most respondents said “it depends,” suggesting that AI is neither an ally nor an adversary, but rather a resource whose benefit depends on the context. The survey also showed mixed opinions on the clarity of professors’ AI policies, with scores ranging from 3 to 10 (1 meaning very unclear and 10 meaning very clear). This wide range suggests that professors follow individual AI policies rather than a university-wide policy (Figure 1).

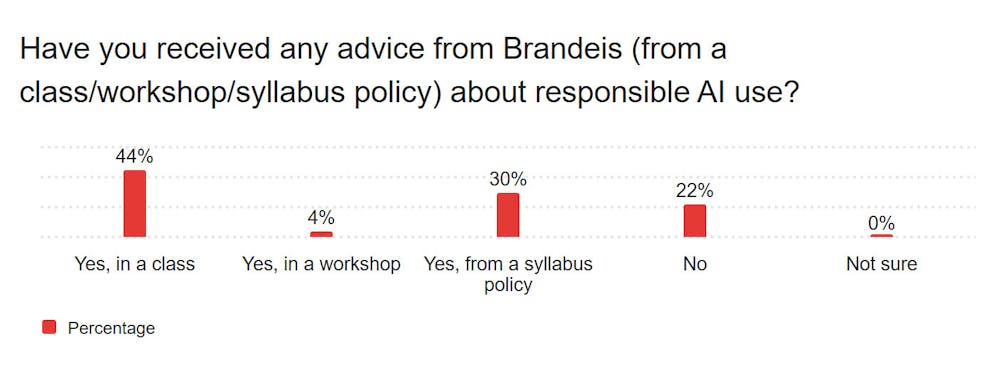

The survey’s section on receiving guidance about the responsible use of AI showcased diverse results (Figure 2). 44% of respondents reported receiving advice on the responsible use of AI in class, 30% through the course’s syllabus and only 4% from workshops. Furthermore, 22% of respondents received no guidance at all. These results suggest that AI literacy workshops may be limited and that students’ guidance varies by course.

Concurrently, 18% of respondents reported not using AI. Some raised concerns that AI threatens entire career fields, such as writing and theater arts, while others reported that learning loses value when a student becomes reliant upon AI. The responses suggest that some Brandeis students have significant concerns about the responsible use of AI and its ethical implications, emphasizing the need for AI literacy on campus.

As part of Brandeis’ efforts to integrate AI into the school curriculum, staff and faculty members worked hard to create a variety of resources for the Brandeis community. Two years ago, the Artificial Intelligence Steering Council was created to promote the responsible and ethical use of AI on campus. The council serves as the University’s central advisory and information-sharing body, and among its responsibilities are reviewing AI policies and monitoring the use of AI in academics and administration. On Brandeis’ website, students and faculty can access a variety of resources on AI literacy, the ethical considerations of AI and effective prompting for generative AI platforms.

Since not everyone may have equal access to technology, prioritizing equity is crucial in academic settings. Matthew Sheehy, one of the co-chairs of the Artificial Intelligence Steering Council and a University librarian, shares that the council works with Student Accessibility Services to ensure equitable access to AI and related technology campus-wide. Sheehy underscored this sentiment, saying, “[i]f you are using technology that you are going to get graded on, every student has to have the same access and the same chance.”

As many students and faculty members are currently skeptical of AI and its implications, Sheehy points out that there is a clear distinction between machine learning and generative AI: “AI has been around since the 80s. What we are talking about now that is really disruptive is generative AI. A lot of our scientists on campus have been using machine learning for decades to do their research. It’s the new form of AI that we are trying to wrap our heads around.” As AI tools continue to evolve, Brandeis faces the challenge of adapting efficient, university-wide policies given the complex nature of AI. As Sheehy sees it, the goal on campus is not to restrict or limit the use of AI, but to ensure it’s used in a thoughtful and ethical way. Since last year, the Brandeis Library has hosted a supercomputer that can run one’s AI instances for experimentation and exploration.

Faculty members and students at Brandeis also have access to another quality resource for AI literacy: the Center for Teaching and Learning. The CTL’s purpose is to support students and instructors in creating the best possible experience at Brandeis. Adam Beaver, the director of the CTL, shares that some of his roles include supporting faculty members, training Teaching Assistants and Community Advisors and providing professional development.

Beaver noted a different perspective on the use of AI, explaining why many students today tend to turn to AI for assistance. “Helping students develop a comfort with discomfort and a comfort with not knowing what the end result of their learning is going to be, but to be intrinsically motivated, rather than motivated just by success or the grade at the end, I think, is probably one of the most important things we can do to keep people using AI,” said Beaver. Even though AI tools provide stability and confidence to students, Beaver emphasized that uncertainty and risk-taking in the learning process matter more than the final result.

Additionally, Beaver said that Brandeis’ lack of a university-wide policy is intentional, allowing professors to determine the right approach to AI based on context. As AI might be extremely beneficial for some classes, such as Computer Science courses, and less relevant to others, individual AI policies can be seen as a strength. On the other hand, there are some pitfalls, such as confusion among students about when and how AI use is allowed in classes. Beaver discussed an important distinction between “learning to write and writing to learn.” Resources such as the Writing Center aim to help students write to learn rather than learn to write. In doing so, students experience the value of learning beyond AI tools that sometimes do the thinking for them.

As part of integrating AI into academia, it is vital to consider its ethical implications. Ezra Tefera, Program Director of the Racial Justice x Technology Policy program at the Heller School, has extensive experience researching social injustice in AI and technology policy. Dr. Tefera shared that RJxTP supports the responsible and ethical use of AI among different community members through courses, workshops and mentoring that teach critical thinking skills. The learning evaluation of the program for 2022-2025 showed that 92% reported “increased subject-matter confidence in post-session surveys.”

Dr. Tefera also serves on Brandeis’ AI Task Force, which aims to “build shared expectations and guardrails around AI across teaching, research, and administration, with real attention to academic integrity, intellectual property and data security, and with an equity lens that doesn’t treat harms as an afterthought,” he shared. He also identifies five ethical challenges that Brandeis community members face with the increased use of AI: academic integrity; privacy and data security; research integrity; intellectual property and ownership; and equity. The University addresses these challenges through established general guidance on the responsible use of AI, and faculty members are actively encouraged to make their AI policies clear in their syllabi.

To address confusion about professors’ AI policies, there is ongoing work to align AI best practices among community members so that responsible AI use can be promoted everywhere, regardless of instructor or employer. Different community members have varying opinions about the use of AI based on how confident they feel about their AI literacy. “Across all of these groups, the strongest dividing line isn’t ‘pro’ versus ‘anti.’ It’s whether someone feels they have clear guidance, enough literacy to use AI without guesswork, and confidence that the university is building norms that protect learning, privacy, and equity at the same time,” Dr. Tefera noted.

Since 2022, the University has offered a total of three courses that explicitly mention artificial intelligence in their title. One such course, “Generative AI Foundations for Business,” is taught by Prof. Shubhranshu Shekhar (BUS). One of Prof. Shekhar’s interests is the applications of machine learning to real-world decision-making. His course encourages students to use current AI tools to learn, brainstorm, and research.

As most courses currently discourage students from using AI, fearing they might use it to cheat, Prof. Shekhar’s course aims to teach best practices for using AI, including its ethical implications. His advice for students is to make sure they have strong AI literacy today. Shekhar shared that “[e]veryone should build an AI literacy akin to computer literacy, or internet literacy. Awareness will help each one see how to best utilize AI for their own benefit.”

Another relevant course at Brandeis is Prof. Adriana Lacy’s (JOUR) AI in Journalism class. Outside of the classroom, Prof. Lacy is also the CEO and Founder of Field Nine Group, and her research interests focus on how newsrooms and media organizations can adopt new technologies responsibly. Her course allows students to use AI tools during the semester to learn about AI-assisted research, audience analytics and ethical considerations, such as bias in training data.

Prof. Lacy said that many people fear that AI can replace humans entirely, but in many fields, such as journalism, the human role remains crucial. “AI is a tool that can accelerate workflows, but it still requires a human with expertise to verify, contextualize and make ethical decisions about what gets published.”

Moreover, Prof. Lacy emphasized the need for AI literacy today. She believes it is important that people understand how AI tools work so they can use them efficiently and ethically when they have to. Even though AI can help save time for specific tasks, critical thinking and ethical reasoning should always come from the individual. As technology continues to evolve, she notes, adaptability is a crucial skill for students to have in order to use new tools responsibly.

Some students at Brandeis use AI tools consistently, while others refuse to use them or try to limit their use as much as possible. Alyssa Golden ’26 is one of two students serving on the Artificial Intelligence Steering Council and is also the student representative to the Board of Trustees. She noted that some professors seem to have moved from assigning take-home papers to in-class writing exams and more oral presentations. As AI-detection tools are still new and flawed, professors fear they might not be able to detect the use of generative AI on their own. Therefore, the need for students to be AI-literate and to adhere to clear university-wide AI policies has become more crucial than ever.

Golden said she has long refused to use ChatGPT as she wants to go to law school and is concerned about intellectual property issues when using AI tools. However, a conversation with her twin brother, who is minoring in Artificial Intelligence at Emory University, changed her perspective.

Golden explained, “He said to me, ‘Well, you can sit and have your concerns, Alyssa, or you can sit and learn how to use AI, like the rest of the world is doing. Because if you sit and say to yourself you are not going to use it ever, eventually you will be way behind, and everyone will be using it.’”

While some students at Brandeis still have concerns regarding the use of AI, other students have learned to use AI regularly in a responsible and effective way. Rezhan Fatah ’27, one of the Undergraduate Department Representatives in the Computer Science department, has learned to integrate AI into his everyday life. Recently, he participated in the Remark X Google DeepMind hackathon, where his team won third place for their project, which used Google’s newest Image AI models to create a better online shopping experience.

Fatah shared that he is an advocate for the responsible and ethical use of AI. “I love using AI to manage my inbox, calendar, and even my notes. One of my favorite AI tools is ChatGPT’s study mode, where I instruct it to never give me answers to problems, but rather point me in the right direction, and help me understand critical concepts.” When AI tools are used thoughtfully and task-specifically to support the learning process, they can be powerful assistants to students. Fatah said that AI tools are meant to make the learning process easier, not to replace one’s work entirely. He emphasized the importance of AI literacy among the Brandeis community, as he believes AI is part of our lives now, and it is in our own best interest to learn to use it responsibly.

As artificial intelligence becomes a permanent part of academic life, all members of the Brandeis community will have to engage with AI tools in one way or another. The University has committed to promoting responsible and ethical use of AI through the creation of the Artificial Intelligence Steering Council and AI literacy initiatives, led by the CTL. A university-wide AI policy is not in place, which is why professors’ expectations regarding AI vary from class to class.

The small-scale survey and interviews show that AI tools are widely used for a variety of purposes. Instead of fully excluding AI from classes, Brandeis is challenged to expand AI literacy opportunities and promote the ethical use of AI through initiatives such as AI literacy workshops. Ultimately, the benefits and harms of AI depend entirely on how it is used: either as a tool for academic dishonesty or academic success.
